Multi-Thread Redgate DLM Deployments

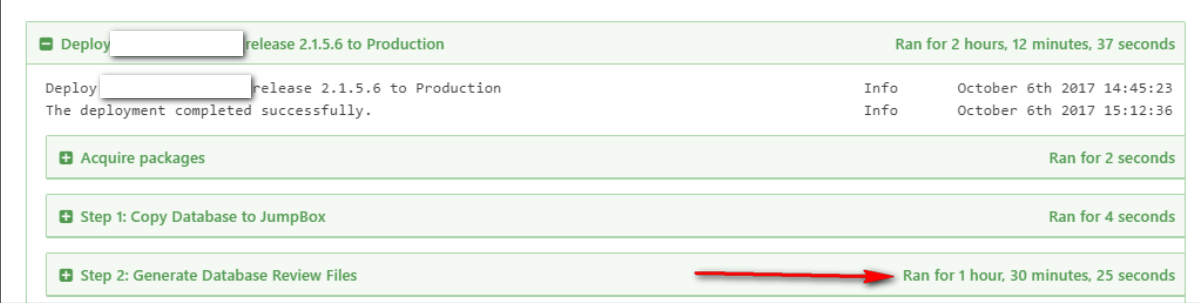

The time had finally come. It was time to run the Redgate automated database deployment process in production for the first time. All we had to do was click the deploy button in Octopus Deploy, and we would be on our way. The button was pressed, and we were on our way. Now we just needed to wait. And wait. And wait.

For 90 minutes.

90 minutes just to generate the delta scripts. It took another 25 minutes to deploy those scripts.

Nothing was frozen. Something was happening. Octopus Deploy showed this through the logs. It just took forever.

Our Process

This isn't my first rodeo with automating database deployments. I know it shouldn't take 2 hours, 12 minutes and 37 seconds to deploy to production. But the previous deployments to production were very simple; a single database package is deployed to a single database. But this was a multi-tenant system. And the decision was made long ago each client would get their own database. Because of that, this was the process put together for automated database deployments.

- Download database package to the Octopus Deploy Tentacle

- Query a configuration database to get the list of client databases. In case the logic ever changed we wrapped that inside a sproc called Octopus.GetActiveDatabases.

- Loop through the database list and generate a unique deployment for each one. Create an Octopus Deploy Artifact containing the "changes.html" for each database. If there are 50 customer databases that would means 50 changes.html files.

- Manual Intervention to review the changes.html for each database

- Assuming the manual intervention is approved, loop through the database list again and deploy those delta scripts.

You might be asking, why not just generate a single database release and deploy that to all the customer databases. Fair enough question. The problem is this is the first time this set of databases has had any automated process for deployments. Trust in the process needs to be built. A great way to do that is to provide an audit of all the changes for each database. To compound that, there is most likely drift between the customer databases. Nothing significant or the code wouldn't work, but probably a sproc has been changed to return the top 300 rows instead of the top 1000 rows or something minor like that.

The Performance Bottleneck

Generating the deployment script for a single database using Redgate DLM Automation typically takes 1-2 minutes. It depends on the number of objects in the database. I've seen some take as long as 5 minutes. But that database had 4000 objects or something ridiculous like that.

Imagine if you had 50 databases. At two minutes per database, you are looking at 100 minutes. But that is if you were processing only a single database at a time. Which we were. Doesn't exactly scale all that well, does it? Explains why it took 90 minutes just generate all the delta scripts.

Multi-Threading PowerShell

For those of you familiar with Octopus Deploy, you know everything it does in the tentacle is done through a PowerShell script. The database deployment was no different. The version 1 of the PowerShell script I wrote was doing a simple ForEach loop when looping through the list of databases to deploy to. There are a lot of similarities between .NET and PowerShell. I know .NET supports multi-threading, and it turns out, so does PowerShell. To a degree.

My first instinct was to use a simple ForEach -Parallel loop. That did not work, the ForEach -Parallel did not like all the Write-Hosts I had throughout my script. I could remove those but I know the Redgate DLM Automation PowerShell cmdlets have those as well. Back to StackOverflow I went. What I landed on was making use of PowerShell's Job functionality.

PowerShell Jobs

PowerShell jobs are pretty straight-forward. You create a script block containing the script you want to multi-thread. The script accepts the necessary variables to run it.

In this case, I wanted to generate a set of release scripts for each database I wanted to deploy to. For this example I am going to modify the existing Octopus Deploy Step provided by Red Gate to generate the database release.

First, up is connecting to a database containing a list of databases to deploy to. What I did was use the built-in variables from the step template $DLMTargetDatabaseServer and $DLMAutomationTargetDatabaseName to store the stored procedure Octopus.GetActiveDatabases. That stored procedure returns the database server and database name to deploy to.

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetUsername))

{

$connectionString = "Server = $DLMAutomationTargetDatabaseServer; Database = $DLMAutomationTargetDatabaseName; Integrated Security=true;"

Write-Host "UserName not present using integrated security"

}

else

{

$connectionString = "Server = $DLMAutomationTargetDatabaseServer; Database = $DLMAutomationTargetDatabaseName;User Id = $DLMAutomationTargetUsername;Password=$DLMAutomationTargetPassword"

Write-Host "UserName present, using SQL Authentication"

}

$sqlConnection = New-Object System.Data.SqlClient.SqlConnection

$sqlConnection.ConnectionString = $connectionString

$sqlCmd = New-Object System.Data.SqlClient.SqlCommand

$sqlCmd.CommandText = 'Octopus.GetActiveDatabases'

$sqlCmd.Connection = $sqlConnection

$sqlAdapter = New-Object System.Data.SqlClient.SqlDataAdapter

$sqlAdapter.SelectCommand = $sqlCmd

$dataSet = New-Object System.Data.DataSet

$sqlAdapter.Fill($dataSet)

Write-Host "Connecting to $DLMAutomationTargetDatabaseServer.$DLMAutomationTargetDatabaseName to get the databases to deploy to"

$databasesToDeployTo = $dataSet.Tables[0]

$databaseCount = $databasesToDeployTo.Rows.Count

Write-Host "Found $databaseCount databases to deploy to."

Next up is to define the script block that will do the actual work.

$block = {

Param($record,

$databaseStatePath,

$DLMAutomationTargetUsername,

$DLMAutomationTargetPassword,

$DLMAutomationIgnoreStaticData,

$DLMAutomationTransactionIsolationLevel,

$DLMAutomationFilterPath,

$DLMAutomationCompareOptions,

$packageExtractPath,

$projectId,

$releaseNumber,

$nugetPackageId)

$dlmDatabaseServer = $record.ServerName

$dlmDatabaseName = $record.DatabaseName

Write-Host "Creating release files for $dlmDatabaseServer $dlmDatabaseName"

$targetDB = New-DlmDatabaseConnection -ServerInstance $dlmDatabaseServer -Database $dlmDatabaseName -Username $DLMAutomationTargetUsername -Password $DLMAutomationTargetPassword

$ignoreStaticData = $DLMAutomationIgnoreStaticData -eq "True"

# Create database deployment resources from the NuGet package to the database

$release = New-DlmDatabaseRelease -Target $targetDB `

-Source $databaseStatePath `

-TransactionIsolationLevel $DLMAutomationTransactionIsolationLevel `

-IgnoreStaticData:$ignoreStaticData `

-FilterPath $DLMAutomationFilterPath `

-SQLCompareOptions $DLMAutomationCompareOptions

$exportPath = Join-Path (Join-Path (Join-Path (Join-Path $packageExtractPath $projectId) $releaseNumber) $nugetPackageId) $dlmDatabaseName

Write-Host "Exporting results to $exportPath"

# Export the deployment resources to disk

$release | Export-DlmDatabaseRelease -Path $exportPath

}

Then I just needed to call that script block from a foreach loop that looped through the database list.

# Remove any of the preceding jobs

Get-Job | Remove-Job

$maxThreads = 4

#Start the jobs by looping through the database to deploy to list. Max 4 jobs are running simultaneously.

foreach($record in $databasesToDeployTo)

{

While ($(Get-Job -state running).count -ge $MaxThreads){

Start-Sleep -Milliseconds 3

}

# Call out to the script block and send in all the arguments. The majority of these arguments come from the default

Start-Job -Scriptblock $block -ArgumentList @($record, $databaseStatePath, $DLMAutomationTargetUsername, $DLMAutomationTargetPassword, $DLMAutomationIgnoreStaticData, $DLMAutomationTransactionIsolationLevel, $DLMAutomationFilterPath, $DLMAutomationCompareOptions, $packageExtractPath, $projectId, $releaseNumber, $nugetPackageId)

}

#Wait for all jobs to finish

While ($(Get-Job -State Running).count -gt 0){

start-sleep 1

}

# Write the results to the host so Octopus Deploy can pick them up

foreach($job in Get-Job){

$info= Receive-Job -Id ($job.Id)

Write-Host $info

}

# Remove any lingering jobs

Get-Job | Remove-Job

Finally, we need to create Octopus Artifacts for each database release. I couldn't do this in the script block because New-OctopusArtifact is not a server installed cmdlet like New-DlmDatabaseRelease is. New-OctopusArtifact only exists in the context of Octopus Deploy.

foreach($record in $databasesToDeployTo)

{

$dlmDatabaseServer = $record.ServerName

$dlmDatabaseName = $record.DatabaseName

$exportPath = Join-Path (Join-Path (Join-Path (Join-Path $packageExtractPath $projectId) $releaseNumber) $nugetPackageId) $dlmDatabaseName

$changesHtmlFileName = "$dlmDatabaseServer-$dlmDatabaseName-ProposedChanges.html"

Write-Host "Creating the artifact $changesHtmlFileName"

New-OctopusArtifact -Path "$exportPath\Reports\Changes.html" -Name $changesHtmlFileName

}

Here is the entire PowerShell script for Octopus Deploy to use. I would suggest installing the Create Database Release step template provided by Redgate and then cloning that. That way you get all the variables and what not.

# Version date: 23rd January, 2017

$ErrorActionPreference = 'Stop'

$VerbosePreference = 'Continue'

# Set process level FUR environment

$env:REDGATE_FUR_ENVIRONMENT = "Octopus Step Templates"

# Check if DLM Automation is installed.

$dlmAutomationModule = Get-Module -ListAvailable -Name DLMAutomation

if ($dlmAutomationModule -eq $null) {

throw "Cannot find DLM Automation on your Octopus Tentacle. If DLM Automation is installed, try restarting the Tentacle service for it to be detected."

}

$currentVersion = $dlmAutomationModule.Version

$minimumRequiredVersion = [version] '2.0.0.0'

if ($currentVersion -lt $minimumRequiredVersion) {

throw "This step requires DLM Automation version $minimumRequiredVersion or later. The current version is $currentVersion. The latest version can be found at http://www.red-gate.com/dlmas/download"

}

# Check the parameters.

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetDatabaseName)) { throw "You must enter a value for 'Target database name'." }

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetDatabaseServer)) { throw "You must enter a value for 'Target SQL Server instance'." }

if ([string]::IsNullOrWhiteSpace($DLMAutomationNuGetDbPackageDownloadStepName)) { throw "You must enter a value for 'Database package step'." }

if ([string]::IsNullOrWhiteSpace($DLMAutomationFilterPath)) { $DLMAutomationFilterPath = $null }

if ([string]::IsNullOrWhiteSpace($DLMAutomationCompareOptions)) { $DLMAutomationCompareOptions = $null }

$queryBatchTimeout = 30

if (![string]::IsNullOrWhiteSpace($DLMAutomationQueryBatchTimeout)) {

if (![int32]::TryParse($DLMAutomationQueryBatchTimeout , [ref]$queryBatchTimeout )) {

throw 'The query batch timeout must be a numerical value (in seconds).'

}

if ($queryBatchTimeout -lt 0) {

throw "The query batch timeout can't be negative."

}

}

# Get the NuGet package installation directory path.

$packageExtractPath = $OctopusParameters["Octopus.Action[$DLMAutomationNuGetDbPackageDownloadStepName].Output.Package.InstallationDirectoryPath"]

if($packageExtractPath -eq $null) {

throw "The 'Database package download step' is not a 'Deploy a NuGet package' step: '$DLMAutomationNuGetDbPackageDownloadStepName'"

}

# Directory containing the extracted database package.

$databaseStatePath = Join-Path -path $packageExtractPath -childPath "db\state"

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetUsername))

{

$connectionString = "Server = $DLMAutomationTargetDatabaseServer; Database = $DLMAutomationTargetDatabaseName; Integrated Security=true;"

Write-Host "UserName not present using integrated security"

}

else

{

$connectionString = "Server = $DLMAutomationTargetDatabaseServer; Database = $DLMAutomationTargetDatabaseName;User Id = $DLMAutomationTargetUsername;Password=$DLMAutomationTargetPassword"

Write-Host "UserName present, using SQL Authentication"

}

$sqlConnection = New-Object System.Data.SqlClient.SqlConnection

$sqlConnection.ConnectionString = $connectionString

$sqlCmd = New-Object System.Data.SqlClient.SqlCommand

$sqlCmd.CommandText = 'Octopus.GetActiveDatabases'

$sqlCmd.Connection = $sqlConnection

$sqlAdapter = New-Object System.Data.SqlClient.SqlDataAdapter

$sqlAdapter.SelectCommand = $sqlCmd

$dataSet = New-Object System.Data.DataSet

$sqlAdapter.Fill($dataSet)

Write-Host "Connecting to $DLMAutomationTargetDatabaseServer.$DLMAutomationTargetDatabaseName to get the active databases to deploy to"

$databasesToDeployTo = $dataSet.Tables[0]

$databaseCount = $databasesToDeployTo.Rows.Count

Write-Host "Found $databaseCount databases to deploy to"

$block = {

Param($record,

$databaseStatePath,

$DLMAutomationTargetUsername,

$DLMAutomationTargetPassword,

$DLMAutomationIgnoreStaticData,

$DLMAutomationTransactionIsolationLevel,

$DLMAutomationFilterPath,

$DLMAutomationCompareOptions,

$packageExtractPath,

$projectId,

$releaseNumber,

$nugetPackageId)

$dlmDatabaseServer = $record.ServerName

$dlmDatabaseName = $record.DatabaseName

Write-Host "Creating release files for $dlmDatabaseServer $dlmDatabaseName"

$targetDB = New-DlmDatabaseConnection -ServerInstance $dlmDatabaseServer -Database $dlmDatabaseName -Username $DLMAutomationTargetUsername -Password $DLMAutomationTargetPassword

$ignoreStaticData = $DLMAutomationIgnoreStaticData -eq "True"

# Create database deployment resources from the NuGet package to the database

$release = New-DlmDatabaseRelease -Target $targetDB `

-Source $databaseStatePath `

-TransactionIsolationLevel $DLMAutomationTransactionIsolationLevel `

-IgnoreStaticData:$ignoreStaticData `

-FilterPath $DLMAutomationFilterPath `

-SQLCompareOptions $DLMAutomationCompareOptions

$exportPath = Join-Path (Join-Path (Join-Path (Join-Path $packageExtractPath $projectId) $releaseNumber) $nugetPackageId) $dlmDatabaseName

Write-Host "Exporting results to $exportPath"

# Export the deployment resources to disk

$release | Export-DlmDatabaseRelease -Path $exportPath

}

# Remove any of the preceding jobs

Get-Job | Remove-Job

$maxThreads = 4

#Start the jobs by looping through the database to deploy to list. Max 4 jobs are running simultaneously.

foreach($record in $databasesToDeployTo)

{

While ($(Get-Job -state running).count -ge $MaxThreads){

Start-Sleep -Milliseconds 3

}

# Call out to the script block and send in all the arguments. The majority of these arguments come from the default

Start-Job -Scriptblock $block -ArgumentList @($record, $databaseStatePath, $DLMAutomationTargetUsername, $DLMAutomationTargetPassword, $DLMAutomationIgnoreStaticData, $DLMAutomationTransactionIsolationLevel, $DLMAutomationFilterPath, $DLMAutomationCompareOptions, $packageExtractPath, $projectId, $releaseNumber, $nugetPackageId)

}

#Wait for all jobs to finish

While ($(Get-Job -State Running).count -gt 0){

start-sleep 1

}

# Write the results to the host so Octopus Deploy can pick them up

foreach($job in Get-Job){

$info= Receive-Job -Id ($job.Id)

Write-Host $info

}

# Remove any lingering jobs

Get-Job | Remove-Job

# Create the artifacts so someone can review them

foreach($record in $databasesToDeployTo)

{

$dlmDatabaseServer = $record.ServerName

$dlmDatabaseName = $record.DatabaseName

$exportPath = Join-Path (Join-Path (Join-Path (Join-Path $packageExtractPath $projectId) $releaseNumber) $nugetPackageId) $dlmDatabaseName

$changesHtmlFileName = "$dlmDatabaseServer-$dlmDatabaseName-ProposedChanges.html"

Write-Host "Creating the artifact $changesHtmlFileName"

New-OctopusArtifact -Path "$exportPath\Reports\Changes.html" -Name $changesHtmlFileName

}

Next is the script to deploy said releases. It is almost the same as before, but this time the script block will call the Import-DlmDatabaseRelease and Use-DlmDatabaseRelease cmdlets.

# Version date: 23rd January, 2017

$ErrorActionPreference = 'Stop'

$VerbosePreference = 'Continue'

# Set process level FUR environment

$env:REDGATE_FUR_ENVIRONMENT = "Octopus Step Templates"

# Check if DLM Automation is installed.

$dlmAutomationModule = Get-Module -ListAvailable -Name DLMAutomation

if ($dlmAutomationModule -eq $null) {

throw "Cannot find DLM Automation on your Octopus Tentacle. If DLM Automation is installed, try restarting the Tentacle service for it to be detected."

}

$currentVersion = $dlmAutomationModule.Version

$minimumRequiredVersion = [version] '2.0.0.0'

if ($currentVersion -lt $minimumRequiredVersion) {

throw "This step requires DLM Automation version $minimumRequiredVersion or later. The current version is $currentVersion. The latest version can be found at http://www.red-gate.com/dlmas/download"

}

# Check the parameters.

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetDatabaseName)) { throw "You must enter a value for 'Target database name'." }

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetDatabaseServer)) { throw "You must enter a value for 'Target SQL Server instance'." }

if ([string]::IsNullOrWhiteSpace($DLMAutomationNuGetDbPackageDownloadStepName)) { throw "You must enter a value for 'Database package step'." }

if ([string]::IsNullOrWhiteSpace($DLMAutomationCompareOptions)) { $DLMAutomationCompareOptions = $null }

$queryBatchTimeout = 30

if (![string]::IsNullOrWhiteSpace($DLMAutomationQueryBatchTimeout)) {

if (![int32]::TryParse($DLMAutomationQueryBatchTimeout , [ref]$queryBatchTimeout )) {

throw 'The query batch timeout must be a numerical value (in seconds).'

}

if ($queryBatchTimeout -lt 0) {

throw "The query batch timeout can't be negative."

}

}

# Get the NuGet package installation directory path.

$packageExtractPath = $OctopusParameters["Octopus.Action[$DLMAutomationNuGetDbPackageDownloadStepName].Output.Package.InstallationDirectoryPath"]

if($packageExtractPath -eq $null) {

throw "The 'Database package download step' is not a 'Deploy a NuGet package' step: '$DLMAutomationNuGetDbPackageDownloadStepName'"

}

# Directory containing the extracted database package.

$databaseStatePath = Join-Path -path $packageExtractPath -childPath "db\state"

if ([string]::IsNullOrWhiteSpace($DLMAutomationTargetUsername))

{

$connectionString = "Server = $DLMAutomationTargetDatabaseServer; Database = $DLMAutomationTargetDatabaseName; Integrated Security=true;"

Write-Host "UserName not present using integrated security"

}

else

{

$connectionString = "Server = $DLMAutomationTargetDatabaseServer; Database = $DLMAutomationTargetDatabaseName;User Id = $DLMAutomationTargetUsername;Password=$DLMAutomationTargetPassword"

Write-Host "UserName present, using SQL Authentication"

}

$sqlConnection = New-Object System.Data.SqlClient.SqlConnection

$sqlConnection.ConnectionString = $connectionString

$sqlCmd = New-Object System.Data.SqlClient.SqlCommand

$sqlCmd.CommandText = 'Octopus.GetActiveDatabases'

$sqlCmd.Connection = $sqlConnection

$sqlAdapter = New-Object System.Data.SqlClient.SqlDataAdapter

$sqlAdapter.SelectCommand = $sqlCmd

$dataSet = New-Object System.Data.DataSet

$sqlAdapter.Fill($dataSet)

Write-Host "Connecting to $DLMAutomationTargetDatabaseServer.$DLMAutomationTargetDatabaseName to get the active databases to deploy to"

$databasesToDeployTo = $dataSet.Tables[0]

$databaseCount = $databasesToDeployTo.Rows.Count

Write-Host "Found $databaseCount databases to deploy to."

$block = {

Param($record,

$packageExtractPath,

$projectId,

$releaseNumber,

$nugetPackageId,

$queryBatchTimeout,

$DLMAutomationTargetUsername,

$DLMAutomationTargetPassword)

$dlmDatabaseServer = $record.ServerName

$dlmDatabaseName = $record.DatabaseName

Write-Host "Deploying the release to the database $dlmDatabaseName on the server $dlmDatabaseServer"

$exportPath = Join-Path (Join-Path (Join-Path (Join-Path $packageExtractPath $projectId) $releaseNumber) $nugetPackageId) $dlmDatabaseName

# Create and test connection to the database.

$databaseConnection = New-DlmDatabaseConnection -ServerInstance $dlmDatabaseServer `

-Database $dlmDatabaseName `

-Username $DLMAutomationTargetUsername `

-Password $DLMAutomationTargetPassword

# Deploy the source schema to the target database.

Write-Host "Timeout = $queryBatchTimeout"

Import-DlmDatabaseRelease $exportPath | Use-DlmDatabaseRelease -DeployTo $databaseConnection -SkipPreUpdateSchemaCheck -SkipPostUpdateSchemaCheck -QueryBatchTimeout $queryBatchTimeout

}

Get-Job | Remove-Job

$maxThreads = 4

#Start the jobs. Max 4 jobs running simultaneously.

foreach($record in $databasesToDeployTo)

{

While ($(Get-Job -state running).count -ge $MaxThreads){

Start-Sleep -Milliseconds 3

}

Start-Job -Scriptblock $block -ArgumentList @($record, $packageExtractPath, $projectId, $releaseNumber, $nugetPackageId, $queryBatchTimeout, $DLMAutomationTargetUsername, $DLMAutomationTargetPassword)

}

#Wait for all jobs to finish

While ($(Get-Job -State Running).count -gt 0){

start-sleep 1

}

foreach($job in Get-Job){

$info= Receive-Job -Id ($job.Id)

Write-Host $info

}

Get-Job | Remove-Job

Conclusion

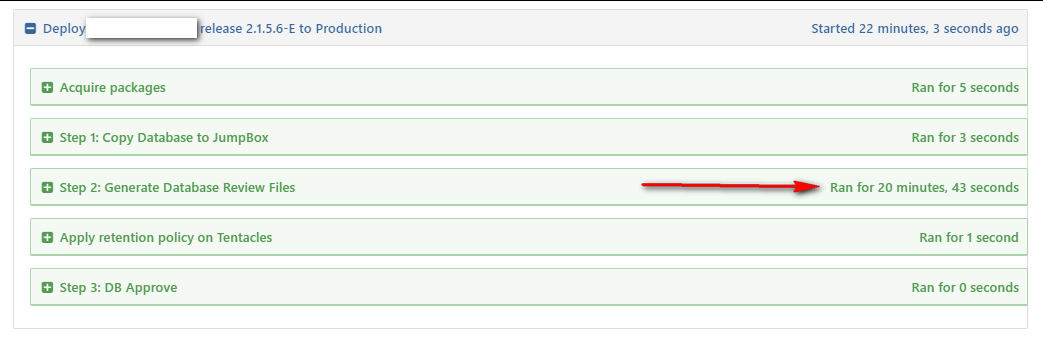

With four concurrent threads, it takes around 20 minutes to generate the database release files.

When I upped it to 8 threads, it took around 12 minutes. That seemed to be the sweet spot for us. All in all, it takes about 20 minutes to deploy to all the active databases. Much, much better than 2 hours, 12 minutes and 37 seconds. It almost passes my litmus test for deployments. Any deployment shouldn't take any longer than it takes to get up from your desk to use the facilities and get a cup of coffee.