Infrastructure: Set Up Octopus Deploy to Use Redgate DLM Automation

Automated database deployments are a critical part of any continuous integration pipeline. Today's tooling makes them easy to add, especially if you use Octopus Deploy and Redgate DLM Automation. Adding the necessary step templates to Octopus Deploy is straightforward. Where I've stumbled in the past is getting the infrastructure in place so those step templates can actually work. I've had the chance to set up Redgate DLM Automation with Octopus Deploy several times. After some trial and error, I have found an infrastructure configuration that I like, that is easy to maintain, and that covers almost any scenario thrown at it.

How Redgate DLM Automation Deployments Work

Before getting started, it is important to understand how the Redgate DLM Automation deployment process works. That understanding explains why the deployment infrastructure is set up the way it is.

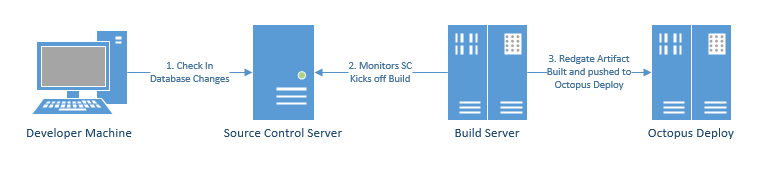

The CI server (or build server) generates an artifact using the Redgate DLM Automation build step. This artifact is the desired state of the database; it includes the entire schema definition. The artifact is then sent to Octopus Deploy, which handles the deployment steps through each environment.

The deployment process runs SQL Compare behind the scenes and generates a delta script for the destination database in the destination environment. The delta script is unique per environment, which is necessary because of the drift between environments. A dev environment might receive multiple builds before one is pushed to QA. The same goes for QA: it might receive multiple builds before one is pushed to production.
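To make the "one artifact, a different delta per environment" idea concrete, here is a toy Python sketch. It is not how SQL Compare works internally; it simply models a schema as a dictionary of object names and versions. The object names, versions, and the `delta_script` function are all hypothetical and exist only for illustration.

```python
from typing import Dict, List


def delta_script(desired: Dict[str, str], current: Dict[str, str]) -> List[str]:
    """Return the actions needed to move `current` to `desired` (toy model)."""
    actions = []
    # Objects in the artifact that are missing or different in the target.
    for name in sorted(desired):
        if name not in current:
            actions.append(f"CREATE {name}")
        elif current[name] != desired[name]:
            actions.append(f"ALTER {name}")
    # Objects in the target that the artifact no longer contains.
    for name in sorted(current):
        if name not in desired:
            actions.append(f"DROP {name}")
    return actions


# One artifact (the desired state) produced by the build server.
artifact = {"dbo.Customer": "v3", "dbo.Order": "v2", "dbo.Invoice": "v1"}

# Hypothetical current state of two environments that have drifted apart.
environments = {
    "dev": {"dbo.Customer": "v3", "dbo.Order": "v2"},
    "production": {"dbo.Customer": "v1", "dbo.Order": "v2", "dbo.Legacy": "v1"},
}

deltas = {env: delta_script(artifact, state) for env, state in environments.items()}
for env, actions in deltas.items():
    print(env, actions)
```

The same artifact produces a one-line delta for dev and a three-line delta for production, because production is further behind.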

SQL Server Deployment Conundrum

Most SQL Servers I've run across are configured in a SQL cluster, a High Availability group, or some combination. The point is that SQL Server, in production, very rarely runs on a single server. No one wants to go change a bunch of connection strings when a failover occurs to a secondary or backup server. This means applications connect using a virtual IP address. Non-DBAs would very rarely connect directly to the actual IP address of a SQL Server.

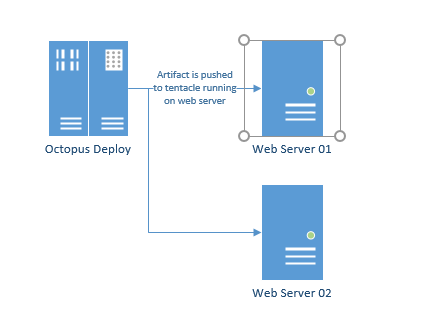

This presents an interesting question because Octopus Deploy's preferred method of doing work is to run tentacles on the machines where the artifacts will be deployed. For example, if you are deploying to a web server farm, a tentacle is installed on each server because the code needs to be deployed to each server.

That is not the case with SQL Server. Through replication and/or synchronization (depending on your setup) you only need to deploy the changes to a single server and the changes will be automatically replicated across to the other nodes.

In addition, DBAs tend to be cautious people. They really don't like additional software installed on their SQL Servers unless it is absolutely necessary. Any additional service or program running on the servers increases the potential for the server to crash and for the DBA to be paged in the middle of the night. This leads to some grumpy conversations with developers the next day (or in the middle of the night).

Infrastructure Configuration

When a website is deployed, the artifact has to be copied directly onto the server. With Redgate DLM Automation, all the work is done by a series of command line tools that connect to SQL Server over port 1433 (the default SQL Server port) and run a series of SQL commands. So the artifacts do not have to be copied onto the SQL Server itself.

A simple solution would be to configure the Redgate DLM Automation step templates in Octopus Deploy to run on the Octopus Deploy server. That leads to everyone's favorite topic: security. If Octopus Deploy is running on-premises in an organization that uses Active Directory, then the Octopus service will be running under a service account. In addition, some organizations make use of integrated security for SQL Server. Redgate recommends that the account making the changes have DB Owner rights.

What that means is the service account running Octopus Deploy would need permissions to connect to SQL Servers with DB Owner permissions. For all environments. Unless you have a really relaxed DBA or one who isn't very security conscious, that will lead to an awkward conversation with perhaps some swear words.

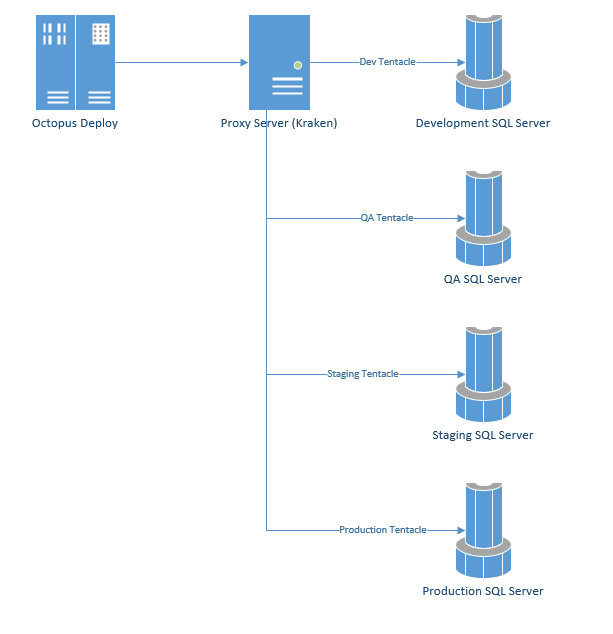

What I have found works best in a situation such as this is to create a unique service account for each environment. So if you have four environments (dev, QA, staging, and production), you would have four service accounts. Then you install a tentacle on a proxy server for each of those service accounts. Again, with four environments you would have four tentacles. Each service account has the necessary DB Owner permissions for the databases in its own environment only.
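The layout described above can be written down as a small data model. The following Python sketch is only an illustration: the account naming scheme (`svc-octopus-db-<env>`), the proxy host name `kraken01`, and the `TentacleConfig` type are assumptions of mine, not anything Octopus Deploy or Redgate prescribes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TentacleConfig:
    environment: str       # the only environment this tentacle serves
    service_account: str   # account the tentacle service runs under
    tentacle_name: str     # instance name on the shared proxy server


def build_proxy_layout(environments: List[str], proxy_host: str = "kraken01") -> List[TentacleConfig]:
    """Create one service account and one tentacle per environment."""
    return [
        TentacleConfig(
            environment=env,
            service_account=f"svc-octopus-db-{env.lower()}",  # hypothetical naming scheme
            tentacle_name=f"{proxy_host}-{env.lower()}",
        )
        for env in environments
    ]


def can_deploy(config: TentacleConfig, target_environment: str) -> bool:
    """A tentacle's account has DB Owner rights in its own environment only."""
    return config.environment == target_environment


layout = build_proxy_layout(["Dev", "QA", "Staging", "Production"])
for cfg in layout:
    print(cfg.tentacle_name, "runs as", cfg.service_account)
```

Four environments yield four accounts and four tentacles on one proxy server, and `can_deploy` captures the rule that a compromised dev account cannot touch production.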

Side Note: In all the cases when I set up this configuration I proposed including the name "kraken" in the server name somewhere.

The proxy server has multiple benefits:

- Increased Security -> Service account for each environment. If one gets compromised the other environments are fine.

- Separation of duties -> the proxy server is only used for SQL Server deployments, which keeps that work off of SQL Server as well as the Octopus Deploy server. If one tentacle decides to crash the system, it doesn't bring down SQL Server or Octopus Deploy; it just stops database deployments.

- Intentional Bottleneck -> Only one database change per environment can be deployed at a time. It does cause a bit of a slowdown at times, but I've run across several instances where having only one schema change occurring on a SQL Server at a time was beneficial, especially if the schema change was a complex one that required rebuilding a table or index.

- Continue to use virtual IP addresses -> Takes advantage of the existing infrastructure set up for applications, which means you don't have to worry about which SQL Server node to deploy to.

- Less Redgate DLM Activation -> in order for Redgate tooling to work past thirty days you have to activate it. Activation involves logging onto the server and running a command from the command line. With this solution, you only have to activate it on one server instead of having to go to each SQL Server and activate it there.
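The intentional bottleneck from the list above can be modeled in a few lines of Python. This is a sketch of the behavior only, not of Octopus Deploy internals: each environment gets its own lock, so deployments to the same environment queue up while different environments proceed independently. The `EnvironmentGate` class and the database names are hypothetical.

```python
import threading
import time
from collections import defaultdict


class EnvironmentGate:
    """Allow one database deployment at a time per environment."""

    def __init__(self):
        self._locks = defaultdict(threading.Lock)  # one lock per environment
        self._guard = threading.Lock()             # protects the bookkeeping below
        self.active = defaultdict(int)
        self.max_active = defaultdict(int)

    def deploy(self, environment: str, database: str) -> None:
        with self._guard:
            lock = self._locks[environment]
        with lock:  # other deployments to this environment wait here
            with self._guard:
                self.active[environment] += 1
                self.max_active[environment] = max(
                    self.max_active[environment], self.active[environment]
                )
            time.sleep(0.01)  # stand-in for running the delta script
            with self._guard:
                self.active[environment] -= 1


gate = EnvironmentGate()
jobs = [("dev", "Sales"), ("dev", "Billing"), ("qa", "Sales"), ("dev", "Inventory")]
threads = [threading.Thread(target=gate.deploy, args=job) for job in jobs]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(dict(gate.max_active))
```

Even with three dev deployments started at once, the recorded peak concurrency per environment never exceeds one.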

One downside is that the proxy server becomes a potential single point of failure. The server is only used for one purpose, so the chances of it failing are slim. However, if that were to happen, it would only take a few moments to install a few tentacles and activate Redgate DLM Automation, and you would be back in business. If you are really worried, you could script that all out.

This same solution can be used if you are running SQL Server authentication instead of integrated security. The primary difference is that there are no per-environment service accounts (obviously); instead, the SQL Server login and password are stored as sensitive variables in Octopus Deploy. The Redgate DLM Automation step templates can accept a SQL Server login and password. If none are provided, integrated security is used.
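The fallback rule (use the login and password if supplied, otherwise integrated security) looks roughly like this in Python. The step templates handle this themselves, so this is only a sketch of the logic; the function name, the server name `sql-dev-vip`, and the choice to reject a half-supplied credential are my own assumptions.

```python
from typing import Optional


def build_connection_string(
    server: str,
    database: str,
    username: Optional[str] = None,
    password: Optional[str] = None,
) -> str:
    """Build a SQL Server connection string, falling back to integrated security."""
    if bool(username) != bool(password):
        # Assumption: supplying only half of a credential is treated as a mistake.
        raise ValueError("Provide both username and password, or neither.")

    parts = [f"Server={server}", f"Database={database}"]
    if username:
        # SQL Server authentication: credentials come from sensitive variables.
        parts += [f"User ID={username}", f"Password={password}"]
    else:
        # No credentials supplied: connect as the tentacle's service account.
        parts.append("Integrated Security=True")
    return ";".join(parts)


# "sql-dev-vip" stands in for the virtual name applications already use.
print(build_connection_string("sql-dev-vip", "Sales"))
print(build_connection_string("sql-dev-vip", "Sales", "deploy_user", "s3cret"))
```

Either way the target is the virtual address, so the deployment does not need to know which node is currently active.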

Conclusion

I've personally been involved with several dozen deployments to production using this configuration. At the companies I've worked with where this configuration was used, the number of deployments to production has reached into the thousands. Across all environments, the number is probably in the tens of thousands. I'm not trying to toot my own horn; besides, this setup was a team effort, not the work of a lone wolf. I'm bringing this up because I think that many successful deployments is a testament to this configuration. It makes the DBAs happy, and it makes the developers happy. It is nice when a plan comes together.